Web Services are arguably one of the most significant advances in enterprise software systems in the last 5 years. Web Services have provided a mechanism to unlock and expose data and business logic buried within legacy systems in a uniform and standardized fashion. This is significant because most enterprises use a multitude of different software systems for various operational and strategic functions. These systems tend to differ widely in their architectures, platforms, programming languages, interfaces and data stores. Web Services have made it possible to have a single standard mechanism to access all of these systems.

Business processes in enterprises often involve accessing more than one system and require information flow among different systems. With standardized, programmable, Web Services-based access to all systems, we now have an opportunity to create so-called "composite applications" that allow seamless transfer of data and invocation of business logic across multiple systems.

SOAP is an important standard for Web Services. While simpler REST-based Web Services are popular, SOAP remains the choice where security and reliability matter most. For both SOAP- and REST-based Web Services, XML is the de facto standard format in which services produce and consume data. This text-based format, with its standardized and well-understood structure, is one of the reasons Web Services were adopted so easily.

While Web Services are a great choice for enterprise system integration, they are also widely used where they probably shouldn't be. The primary scenario where Web Services are not the best choice is the integration of sub-systems within a system built by the same vendor, particularly when a large amount of data is transferred among those sub-systems. Sub-systems owned by different development sub-groups are often built independently, without any attempt to standardize on common infrastructure or on service contracts between sub-systems. This is typically a result of development groups growing unmanageably large (see A Few Good Men) and of sub-groups inherited through mergers and acquisitions. Each sub-system tends to adopt its own architecture, frameworks, metadata and data formats, and access mechanisms, ending up looking very different from the others even though they may all be part of the same product suite sold by the same vendor. When it comes time for these sub-systems to talk to each other, the challenge is easily addressed with the magic words: Web Services. Each sub-system builds a Web Services layer and now they can all talk to each other. Problem solved.

In reality, though, this approach is very inefficient. These sub-systems often live in the same runtime, and communication among them does not cross process boundaries. In such cases, what should be simple co-located service API calls exchanging objects between sub-systems becomes an inefficient process carrying the unnecessary penalty of protocols such as SOAP and the overhead of XML processing. XML is verbose by nature, and parsing it is expensive even with modern parsers. When the volume of data is large, the performance overhead of object->XML->object transformations can be very significant. Data exchanges in binary format are typically orders of magnitude more efficient than text-based formats. This study illustrates graphically a benchmark that supports this argument.
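To make the verbosity point concrete, here is a minimal Java sketch that encodes the same records once as hand-built XML and once as fixed-width binary with java.io.DataOutputStream, then compares payload sizes. It measures size only, not parsing or transformation cost, and it is not the benchmark referred to above; the Order class and its field layout are hypothetical.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;

public class PayloadSizeDemo {

    /** Hypothetical record exchanged between two co-located sub-systems. */
    static final class Order {
        final int id;
        final int quantity;
        final double price;

        Order(int id, int quantity, double price) {
            this.id = id;
            this.quantity = quantity;
            this.price = price;
        }
    }

    /** Encodes orders as a simple XML document, built by hand to avoid any framework. */
    static byte[] toXml(Order[] orders) {
        StringBuilder sb = new StringBuilder("<orders>");
        for (Order o : orders) {
            sb.append("<order><id>").append(o.id).append("</id>")
              .append("<quantity>").append(o.quantity).append("</quantity>")
              .append("<price>").append(o.price).append("</price></order>");
        }
        sb.append("</orders>");
        return sb.toString().getBytes(StandardCharsets.UTF_8);
    }

    /** Encodes the same orders as fixed-width binary: a 4-byte count, then 16 bytes per order. */
    static byte[] toBinary(Order[] orders) throws IOException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(bytes)) {
            out.writeInt(orders.length);
            for (Order o : orders) {
                out.writeInt(o.id);        // 4 bytes
                out.writeInt(o.quantity);  // 4 bytes
                out.writeDouble(o.price);  // 8 bytes
            }
        }
        return bytes.toByteArray();
    }

    public static void main(String[] args) throws IOException {
        Order[] orders = new Order[10_000];
        for (int i = 0; i < orders.length; i++) {
            orders[i] = new Order(i, i % 50 + 1, 19.99);
        }
        int xmlSize = toXml(orders).length;
        int binarySize = toBinary(orders).length;
        System.out.printf("XML: %d bytes, binary: %d bytes, ratio %.1f%n",
                xmlSize, binarySize, (double) xmlSize / binarySize);
    }
}
```

Each binary record occupies exactly 16 bytes, while each XML record repeats its element names as text; turning that text back into objects on the consumer side adds parsing cost on top of the size difference.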

The need for loose coupling among sub-systems is often cited as a reason for integrating them through Web Services. The specious argument goes like this: if two sub-systems expose APIs and share object models, any change to one sub-system will require re-compilation of the other, which is undesirable. What is often overlooked, however, is that even with Web Services, if the format of the XML data produced by a service changes, the consumer of that XML has to rework its business logic, which again means re-compilation and re-deployment.

The right paradigm for designing systems is to adopt the M13 services paradigm, in which all business functionality is thought of in terms of services. Services do not necessarily mean Web Services. One defines Java service interfaces that produce and consume serializable Java objects, and builds their implementations, independently of how the services will be exposed and invoked. An external, declarative provisioning mechanism then dictates the best mode of service consumption: plain Java APIs, Web Services, EJBs, etc. The underlying infrastructure insulates the service author from provisioning and lets him or her focus on the service contract and business logic. It also allows the optimal invocation mechanism to be chosen for each scenario. Of course, this requires all sub-systems to conform to a common service authoring paradigm such as M13.
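A minimal sketch of the idea in plain Java: the service contract is an ordinary interface exchanging a serializable object, the implementation contains only business logic, and a small locator decides how callers obtain the service. CustomerService, Customer and ServiceLocator are hypothetical names, and the locator is only a stand-in for a declarative provisioning layer; it does not show how M13 itself provisions services.

```java
import java.io.Serializable;
import java.util.HashMap;
import java.util.Map;

public class ServiceParadigmDemo {

    /** Serializable value object exchanged by the service; no XML mapping involved. */
    public static final class Customer implements Serializable {
        private static final long serialVersionUID = 1L;
        private final String id;
        private final String name;

        public Customer(String id, String name) {
            this.id = id;
            this.name = name;
        }

        public String getId() { return id; }
        public String getName() { return name; }
    }

    /** Hypothetical service contract: plain Java, independent of how it is exposed. */
    public interface CustomerService {
        Customer findCustomer(String id);
    }

    /** Business logic only; it knows nothing about SOAP, EJBs or transports. */
    public static final class InMemoryCustomerService implements CustomerService {
        private final Map<String, Customer> store = new HashMap<>();

        public InMemoryCustomerService() {
            store.put("c1", new Customer("c1", "Acme Corp"));
        }

        @Override
        public Customer findCustomer(String id) {
            return store.get(id);
        }
    }

    /**
     * Hypothetical stand-in for declarative provisioning. Here it only hands out the
     * co-located implementation; a real layer could return a remote proxy instead,
     * chosen by external configuration rather than by the service author.
     */
    public static final class ServiceLocator {
        private final Map<Class<?>, Object> services = new HashMap<>();

        public <T> void register(Class<T> type, T impl) {
            services.put(type, impl);
        }

        public <T> T lookup(Class<T> type) {
            return type.cast(services.get(type));
        }
    }

    public static void main(String[] args) {
        ServiceLocator locator = new ServiceLocator();
        locator.register(CustomerService.class, new InMemoryCustomerService());

        // A co-located sub-system calls the service directly: no XML, no SOAP envelope.
        CustomerService service = locator.lookup(CustomerService.class);
        System.out.println(service.findCustomer("c1").getName());
    }
}
```

Because callers depend only on CustomerService, the same implementation could be wrapped in a Web Services or EJB facade for remote consumers, while co-located sub-systems keep calling it directly.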

Web Services are great for exchanging small, targeted data sets between disparate systems. They may be the wrong choice when you have full control over the systems on both ends of the wire; in those cases, consider more efficient alternatives.

Sunday, December 30, 2007

Checked versus Unchecked Exceptions in Java

There has always been a debate in the Java community about checked versus unchecked exceptions. Java purists would argue that unchecked exceptions should be used only for conditions you cannot recover from at runtime. In recent years, however, the thinking has shifted a bit (or is it muddled a bit?), with successful frameworks such as Spring promoting heavy use of unchecked exceptions. For lightweight frameworks such as Spring, one of the principal value propositions is that you write very little code to get your job done. Unchecked exceptions support that value because you are not forced to write try/catch blocks or to declare the exceptions a method throws. I believe a significant percentage of software applications are lightweight in nature (see Who produces the most applications software?), and their creators look for simple, lightweight frameworks such as Spring to get the job done. This is the reason for the widespread adoption and success of such frameworks. For lightweight applications, unchecked exceptions work just fine.

As you move up the chain to more complex applications, the reasoning about exceptions changes. When an application has many sub-components written by different groups, containing hundreds of thousands of lines of code, there is more need for rigor and discipline. Building complex software applications is still very difficult and costly. One of the biggest costs in such systems is diagnosing and fixing bugs after the software has been shipped to, and deployed at, customer sites. For such systems, anything that reduces the chance of code problems at development time, or helps diagnose problems after deployment, is of immense value. Checked exceptions help towards that goal: they ensure compile-time detection and enforcement of the handling of abnormal conditions. They force developers to think through exception scenarios and write appropriate exception handlers. These handlers can take appropriate action, such as logging state at the time the exception occurs for easier diagnosis, freeing resources to avoid leaks, applying corrective business logic such as re-initializing state, or re-casting the exception to fit a service contract. With unchecked exceptions and a single catch-all handler at the top level of the application, none of this is possible.
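The kinds of handler described above can be sketched in Java as follows. The service contract declares a checked ReportServiceException, so the compiler forces the implementation to handle the low-level IOException: it logs the failure, relies on try-with-resources to free the reader, and re-casts the exception to fit the contract. ReportService, DataSource and ReportServiceException are hypothetical names chosen for illustration.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.util.logging.Level;
import java.util.logging.Logger;

public class ReportServiceDemo {

    /** Hypothetical checked exception that forms part of the published service contract. */
    public static class ReportServiceException extends Exception {
        public ReportServiceException(String message, Throwable cause) {
            super(message, cause);
        }
    }

    /** Hypothetical service contract: every caller must deal with ReportServiceException. */
    public interface ReportService {
        String generate(String reportId) throws ReportServiceException;
    }

    /** Hypothetical low-level data source; a real one might open a file or a socket. */
    public interface DataSource {
        Reader open(String reportId) throws IOException;
    }

    public static class DefaultReportService implements ReportService {
        private static final Logger LOG = Logger.getLogger(DefaultReportService.class.getName());
        private final DataSource source;

        public DefaultReportService(DataSource source) {
            this.source = source;
        }

        @Override
        public String generate(String reportId) throws ReportServiceException {
            // try-with-resources closes the reader on every path, so a failure cannot leak it.
            try (Reader reader = source.open(reportId)) {
                StringBuilder report = new StringBuilder();
                int c;
                while ((c = reader.read()) != -1) {
                    report.append((char) c);
                }
                return report.toString();
            } catch (IOException e) {
                // The compiler insists on this handler. Log the state at the point of failure...
                LOG.log(Level.WARNING, "Could not generate report " + reportId, e);
                // ...then re-cast the low-level exception to fit the service contract.
                throw new ReportServiceException("Report " + reportId + " is unavailable", e);
            }
        }
    }

    public static void main(String[] args) {
        ReportService ok = new DefaultReportService(id -> new StringReader("data for " + id));
        ReportService broken = new DefaultReportService(id -> {
            throw new IOException("disk not mounted");
        });
        try {
            System.out.println(ok.generate("q3"));
            broken.generate("q4");
        } catch (ReportServiceException e) {
            System.out.println(e.getMessage() + " (cause: " + e.getCause().getMessage() + ")");
        }
    }
}
```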

The natural question that comes to mind is, of course: what is considered a complex application and what is considered a lightweight one? Some applications clearly fall at either end of the complexity spectrum, but many fall in a big gray area in between. Complexity also tends to be a relative term: complex for one development group may be simple for another. For the majority of applications in the middle, there is no formula for picking the right approach to exception management. The correct approach involves a judicious mix of checked and unchecked exceptions. I follow these rules:

- Use checked exceptions for coarse-grained service contracts exposed to external clients you have no control over. Exceptions are part of the formal service contract you are publishing to your clients.

- Use checked exceptions for situations that are likely to occur due to invalid input to your services, e.g., a client calling a login service without providing a user id.

- Use unchecked exceptions within your own service implementation code that performs very basic operations, is exercised very frequently, and where exceptions are unlikely to occur unless there is a basic flaw in the programming logic, e.g., indexing into a list with an out-of-bounds index. Imagine how painful our code would be if the java.lang.IndexOutOfBoundsException thrown by the widely used java.util.List::get(int) were a checked exception! In fact, I use the List analogy as a test: I ask myself whether the exception scenario is at the same level as the List indexing example. If it is, I use an unchecked exception; if not, I use a checked exception.

- Use unchecked exceptions for conditions from which the program cannot or should not attempt to recover, e.g., running out of system memory.
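The rules above might look like this in a small, hypothetical login service (LoginService, InvalidLoginException and userAt are illustrative names, not from any framework). Invalid input is reported with a checked exception that is part of the contract, while an internal helper simply lets List.get's unchecked IndexOutOfBoundsException propagate, since a bad index there would indicate a programming bug.

```java
import java.util.List;

public class LoginServiceDemo {

    /** Hypothetical checked exception: bad client input is expected and recoverable. */
    public static class InvalidLoginException extends Exception {
        public InvalidLoginException(String message) {
            super(message);
        }
    }

    /** Hypothetical coarse-grained contract for external clients; its exception is part of it. */
    public interface LoginService {
        String login(String userId, String password) throws InvalidLoginException;
    }

    public static class SimpleLoginService implements LoginService {
        private final List<String> users;

        public SimpleLoginService(List<String> users) {
            this.users = List.copyOf(users);
        }

        @Override
        public String login(String userId, String password) throws InvalidLoginException {
            if (userId == null || userId.isEmpty()) {
                throw new InvalidLoginException("A user id is required");
            }
            if (!users.contains(userId)) {
                throw new InvalidLoginException("Unknown user: " + userId);
            }
            // Password verification is omitted to keep the sketch short.
            return "session-" + userId;
        }

        /**
         * Internal helper: an out-of-range position can only come from a programming bug,
         * so List.get's unchecked IndexOutOfBoundsException is allowed to propagate.
         */
        String userAt(int position) {
            return users.get(position);
        }
    }

    public static void main(String[] args) {
        SimpleLoginService service = new SimpleLoginService(List.of("alice", "bob"));
        try {
            System.out.println(service.login("alice", "secret"));
            service.login("", "secret");
        } catch (InvalidLoginException e) {
            // The compiler made us handle this; a client can prompt the user again.
            System.out.println("Login rejected: " + e.getMessage());
        }
        System.out.println(service.userAt(1));  // no try/catch needed for an unchecked exception
    }
}
```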

Who produces the most Applications Software?

If I asked you who produces the largest amount of business applications software, you'd probably say Oracle, SAP, Microsoft, IBM or one of the many software vendors that produce and sell such software. Until recently I would have agreed with you completely. But I'm beginning to realize that most applications software is produced by IT groups within companies, not by large software vendors. Although companies spend millions of dollars buying software applications to run their businesses, they also produce a lot of code internally for their own consumption. This could be enhancements or customizations to purchased applications that did not meet their business needs out of the box. It could also be brand-new applications specific to their business, for which no commercial software is readily available. Most of these applications tend to be simple (and, interestingly, short-lived), since IT groups are usually constrained in budget and skills for full-fledged, large-scale software development. However, given the massive number of companies worldwide that use software for their daily operations, the total number of such applications is very large. It is because of this large market for simple, lightweight applications that lightweight frameworks such as Spring have gained so much popularity.

So who produces the most applications software? Company IT groups, taken together, do.

Saturday, December 29, 2007

A Few Good Men

One of the classic problems in software development is accurately estimating how many developers are needed to complete a task. In my 15+ years of commercial software development in small and large companies, I've yet to come across a project where the initial resource estimate ended up anywhere close to correct. Invariably the estimate is much lower than what it actually takes to complete the project. Why is this? I think it is because some of the intangible aspects of developer efficiency are overlooked in the resource estimation process.

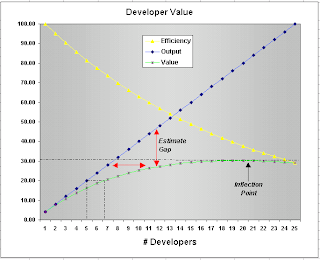

What is the optimal size of a developer group engaged in a project with shared, common, inter-dependent tasks? I believe the optimal size is 1! One brilliant developer with all the required skills produces software in the most efficient fashion. Of course, a team of one will take forever to complete any reasonably sized project, and most teams obviously need more than one developer. My thesis is that every additional developer decreases the group's efficiency by 5% relative to the previous level. So if a team of 1 developer works at 100% efficiency, a team of 2 developers works at 95% efficiency (0.95 x 100), a team of 3 developers works at 90.25% efficiency (0.95 x 95), a team of 4 developers works at 85.74% efficiency (0.95 x 90.25), and so on. This is shown graphically in Figure 1, where the yellow line indicates the decrease in efficiency as the number of developers increases.

Figure 1. Developer Value
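The 5%-per-developer thesis amounts to a geometric decay in efficiency. Writing E(n) for the efficiency of a team of n developers, each additional developer multiplies the previous level by 0.95:

$$
E(n) = 0.95^{\,n-1}, \qquad E(1) = 1,\quad E(2) = 0.95,\quad E(3) = 0.9025,\quad E(4) \approx 0.8574
$$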

Why does efficiency decrease as the number of developers increases? The decrease is due to many factors, the principal one being source code dependency and version management. When a group of developers share a common code base, there are invariably inefficiencies related to:

- module inter-dependency ("my change broke his code"),

- code sharing ("I have to rebase to his changes before delivering my changes to the same file"),

- difficulty in ensuring a common understanding of the project design goals and principles ("I thought you meant this rather than that 3 months ago"),

- differing developer backgrounds, styles and skill levels ("this guy's code is so cryptic, I'd rather re-write it than try to figure it out"), and

- the increasingly distributed nature of development, with language, culture and time-zone related communication problems ("wish I could quickly and easily get this guy in Bangalore or Minsk to understand exactly what I mean").

As the number of developers increases, output obviously increases. This increase is linear and is shown in Figure 1 as the blue line. However, this output does not factor in the cost of the inefficiencies mentioned above. These inefficiencies introduce a cost penalty that needs to be factored into the calculation of true "developer value", shown in Figure 1 as the green line. Note that as the number of developers increases, developer value rises more slowly than developer output. Resource estimates are typically based on the output curve, when in fact they should be based on the value curve. The difference between the output curve and the value curve is called the "estimate gap", indicated by the red double-arrow lines between the blue (output) and green (value) curves. Overlooking this estimate gap is why projects consistently underestimate the resources they require.
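The three curves can be sketched in Java under one assumption the post does not state explicitly: that developer value is raw output multiplied by team efficiency, V(n) = n * 0.95^(n-1). This assumption closely reproduces the mapping table in the next section, but it is an interpretation rather than the author's stated formula.

```java
public class DeveloperValue {

    /** Team efficiency under the 5%-per-additional-developer thesis: 1.0, 0.95, 0.9025, ... */
    static double efficiency(int n) {
        return Math.pow(0.95, n - 1);
    }

    /** Raw output grows linearly with headcount (the blue line). */
    static double output(int n) {
        return n;
    }

    /** Assumed value model (the green line): raw output discounted by team efficiency. */
    static double value(int n) {
        return output(n) * efficiency(n);
    }

    public static void main(String[] args) {
        System.out.println(" n  output   value     gap");
        for (int n = 1; n <= 25; n++) {
            double gap = output(n) - value(n);  // the "estimate gap" (red arrows)
            System.out.printf("%2d %7.2f %7.2f %7.2f%n", n, output(n), value(n), gap);
        }
    }
}
```

Under this model the gap column widens with every additional developer, which is why an output-based estimate falls further behind as headcount rises.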

So what does this value curve mean? Let's say you estimate, based on the amount of code that needs to be written, that you need 5 developers to complete a task. In Figure 1, assume the y-axis is re-scaled so that 20% output corresponds to 100% of your task, so 5 developers are estimated to be required to complete the whole task. From the output and value curves, you actually need 6.7 developers once you factor in the estimate gap. The table below maps the estimated number of developers based on output to the required number of developers based on value. This mapping can be used to arrive at more accurate resource estimates, rather than going with the incorrect output-based estimate.

| # Developers Estimated (Output) | # Developers Actually Required (Value) |
|---|---|
| 1 | 1 |
| 2 | 2.1 |
| 3 | 3.3 |
| 4 | 5 |
| 5 | 6.7 |
| 6 | 9 |
| 7 | 13 |
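Under the same assumed model, V(n) = n * 0.95^(n-1), the table can be approximated by solving V(n) = estimate for n. The hypothetical helper below does this by bisection on the range where V is increasing (from 1 up to its peak at n = -1/ln 0.95) and returns NaN for estimates above the peak value. Its results land within about 0.15 of the table entries, which were presumably read off Figure 1.

```java
public class EstimateMapping {

    /** Assumed value model: n developers deliver n * 0.95^(n-1) units of effective output. */
    static double value(double n) {
        return n * Math.pow(0.95, n - 1);
    }

    /** Headcount at which value stops increasing: dV/dn = 0 at n = -1 / ln(0.95), about 19.5. */
    static final double PEAK = -1.0 / Math.log(0.95);

    /**
     * Solves value(n) == estimated by bisection on [1, PEAK], where value(n) is increasing.
     * Returns NaN when the estimate is below 1 or above the peak value (about 7.55).
     */
    static double requiredDevelopers(double estimated) {
        if (estimated < 1 || estimated > value(PEAK)) {
            return Double.NaN;
        }
        double lo = 1.0;
        double hi = PEAK;
        for (int i = 0; i < 60; i++) {  // 60 halvings shrink the interval far below any useful precision
            double mid = (lo + hi) / 2;
            if (value(mid) < estimated) {
                lo = mid;
            } else {
                hi = mid;
            }
        }
        return (lo + hi) / 2;
    }

    public static void main(String[] args) {
        for (int estimated = 1; estimated <= 8; estimated++) {
            System.out.printf("%d -> %.1f%n", estimated, requiredDevelopers(estimated));
        }
    }
}
```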

An interesting aspect of the value curve is that it peaks at around 21 developers (indicated in Figure 1), after which the value actually starts decreasing! This means that a group of 21 or more developers working on shared, common, inter-dependent project tasks is likely to be a loss-making proposition. The output-based estimate corresponding to 21 developers based on value turns out to be between 7 and 8. So if your output-based estimate comes to more than 7 developers (which is more than 13 developers based on value), you run a huge risk of failure. In such a scenario, revisit your project tasks and try to break them up into more independent sub-projects. If that is not possible, you are dealing with an immensely complex software system that, given today's state of the art, is impossible to build effectively. Hopefully such systems are rare.
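Assuming developer value is output discounted by efficiency, V(n) = n * 0.95^(n-1) (an interpretation that reproduces the table above closely, not a formula stated in the post), the peak can be located by setting the derivative to zero:

$$
V(n) = n\,(0.95)^{n-1}, \qquad
\frac{dV}{dn} = (0.95)^{n-1}\left(1 + n \ln 0.95\right) = 0
\;\Longrightarrow\;
n^{*} = -\frac{1}{\ln 0.95} \approx 19.5, \qquad V(n^{*}) \approx 7.55
$$

The analytic peak sits somewhat below the figure's reading of about 21, but the peak value of roughly 7.55 is what places the output-based ceiling between 7 and 8.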

So for your next project, bridge the estimate gap to arrive at better resource estimates, and remember the magic upper limit numbers - 7 and 13.

Monday, December 3, 2007

"Tear-off" from online Flash App to offline AIR App

Say you have an interactive, connected, online, browser-based Flex application used for scenario-based analysis. Users of this application need to be able to "tear off" a scenario and work on it offline in an AIR application. How does one achieve this while re-using the code written for the online application? The following outlines one approach:


- The online Flash client requests from the server the metadata and data needed to render the scenario view

- ActionScript metadata and data objects are delivered to the client from their Java counterparts on the server using FDS remoting (AMF3 over HTTP)

- The client writes these objects into a byte array in a pre-defined sequence and sends it to the server using URLLoader

- The server writes these bytes into a temporary file on the server's disk

- The client uses FileReference::download() to download this file to the client machine
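The server-side step above, writing the bytes posted by the client to a temporary file, could look roughly like this in Java. It is a sketch under assumptions: the servlet or FDS wiring is omitted, and storeScenario is a hypothetical helper that would receive the request body stream (for example from HttpServletRequest.getInputStream()) and return the path that a later download request serves.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;

public class ScenarioUploadStore {

    /**
     * Writes the client-serialized bytes to a temporary file and returns its path,
     * so that a subsequent download request can stream the file back.
     */
    static Path storeScenario(InputStream requestBody) throws IOException {
        Path file = Files.createTempFile("scenario-", ".bin");
        // REPLACE_EXISTING is needed because createTempFile has already created an empty file.
        Files.copy(requestBody, file, StandardCopyOption.REPLACE_EXISTING);
        return file;
    }

    public static void main(String[] args) throws IOException {
        byte[] payload = {1, 2, 3, 4};  // stands in for the byte array built by the Flash client
        Path stored = storeScenario(new ByteArrayInputStream(payload));
        System.out.println(stored + " holds " + Files.size(stored) + " bytes");
        Files.deleteIfExists(stored);   // a real server would delete it after the download completes
    }
}
```

Temporary files should be cleaned up once the download completes, or on a schedule, so abandoned tear-offs do not accumulate on the server disk.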

Once downloaded, the AIR application (containing the exact same rendering code as the online application) reads this data file to de-serialize the byte arrays as ActionScript metadata and data objects, and renders the scenario view.

Works, but ugly and convoluted! Got an alternative?
